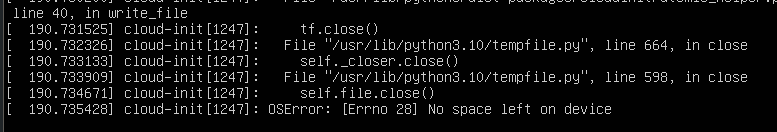

I have run out of space multiple times on this VM, and while I thought I had given it more space, I clearly did something wrong. The services won’t load, which has been caused by this issue in the past.

The host shows this:

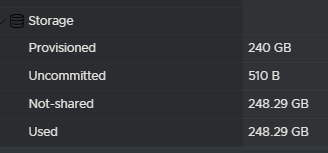

In ESXi I increased the drive to 240 GB, but that alone did not work:

You can see that ubuntu--vg-ubuntu--lv is at 100% and is still only 58G.

$ df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 794M 79M 716M 10% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 58G 58G 0 100% /

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 2.0G 245M 1.6G 14% /boot

tmpfs 794M 4.0K 794M 1% /run/user/1000

alex@ubserv:/dev/mapper$

So what is ubuntu--vg-ubuntu--lv? Let’s answer this thanks to AI.

/dev/mapper/ubuntu--vg-ubuntu--lv is the device-mapper path for a logical volume managed by LVM (Logical Volume Manager) on a Linux system. Here’s what each part means:

- /dev/mapper/: This is where device-mapper and LVM create block device files for logical volumes.
- ubuntu--vg: This is the Volume Group (VG) name. Here it is called ubuntu-vg, but each dash in the name is escaped as -- in this path.
- ubuntu--lv: This is the Logical Volume (LV) name, here called ubuntu-lv.

So: /dev/mapper/ubuntu--vg-ubuntu--lv

is the same logical volume as: /dev/ubuntu-vg/ubuntu-lv

They both point to the same device; it’s just a different naming convention.
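The dash-doubling rule is easy to reproduce. Here is a minimal sketch, assuming a hypothetical helper called mapper_path (it is not part of LVM), that builds the /dev/mapper path from the VG and LV names:

```shell
# mapper_path is a hypothetical helper, not an LVM command.
# It doubles every dash inside the VG and LV names, then joins
# the two names with a single dash, as device-mapper does.
mapper_path() {
  vg=$(printf '%s' "$1" | sed 's/-/--/g')
  lv=$(printf '%s' "$2" | sed 's/-/--/g')
  printf '/dev/mapper/%s-%s\n' "$vg" "$lv"
}

mapper_path ubuntu-vg ubuntu-lv   # prints /dev/mapper/ubuntu--vg-ubuntu--lv
```

The single dash that remains between the two escaped names is what separates the volume group from the logical volume.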

Let’s try to fix this.

Run lsblk to see what’s available:

$ lsblk /dev/sda

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 240G 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 2G 0 part /boot

└─sda3 8:3 0 118G 0 part

└─ubuntu--vg-ubuntu--lv 253:0 0 59G 0 lvm /

We can see here that the disk is now 240G, but sda3 is still only 118G and the logical volume is stuck at 59G (why it’s a gig more than what was shown before, I don’t know, but I am not too worried about that right now). These are the commands I ran:

$ sudo parted /dev/sda

GNU Parted 3.4

Using /dev/sda

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) print

Model: VMware Virtual disk (scsi)

Disk /dev/sda: 258GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 1049kB 2097kB 1049kB bios_grub

2 2097kB 2150MB 2147MB ext4

3 2150MB 129GB 127GB

(parted) resizepart 3

End? [129GB]? 240G

(parted) quit

Information: You may need to update /etc/fstab.

The next step is to run sudo pvresize /dev/sda3, which tells LVM to rescan /dev/sda3 and recognize the newly added space inside that partition. However, this failed with an error:

/etc/lvm/archive/.lvm_ubserv_27426_1200540192: write error failed: No space left on device

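0 physical volume(s) resized or updated / 1 physical volume(s) not resized

LVM writes a metadata backup under /etc/lvm/archive before changing anything, and the root filesystem was completely full, so we need to clear up some space before this can run: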

sudo apt clean

sudo journalctl --vacuum-time=2d

apt clean did almost nothing, but the journal cleanup freed 874M from /var/log/journal/.
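If those two commands had not freed enough, the next step would have been to look for whatever is taking up the space. Here is a rough sketch, assuming a hypothetical helper called largest_dirs and GNU du, that lists the biggest entries one level below a directory:

```shell
# largest_dirs is a hypothetical helper, not a standard command.
# Usage: largest_dirs [directory] [how many lines to show]
largest_dirs() {
  dir="${1:-/var}"
  count="${2:-10}"
  # -x stays on one filesystem, -k reports sizes in KiB,
  # -d 1 limits the listing to one level below "$dir".
  du -x -k -d 1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}
```

Running largest_dirs /var 5 (with sudo, if some directories are not readable) shows the directory total first, followed by its largest children. For the journal specifically, journalctl --disk-usage reports how much space the logs currently take.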

Now, running the command was successful:

$ sudo pvresize /dev/sda3

Physical volume "/dev/sda3" changed

1 physical volume(s) resized or updated / 0 physical volume(s) not resized

So we resized the physical volume, and now need to extend the logical volume to use the free space:

$ sudo lvextend -l +100%FREE /dev/ubuntu-vg/ubuntu-lv

Size of logical volume ubuntu-vg/ubuntu-lv changed from <59.00 GiB (15103 extents) to 221.51 GiB (56707 extents).

Logical volume ubuntu-vg/ubuntu-lv successfully resized.

Finally, we need to resize the filesystem. Since it’s ext4, we can run this:

$ sudo resize2fs /dev/ubuntu-vg/ubuntu-lv

resize2fs 1.46.5 (30-Dec-2021)

Filesystem at /dev/ubuntu-vg/ubuntu-lv is mounted on /; on-line resizing required

old_desc_blocks = 8, new_desc_blocks = 28

The filesystem on /dev/ubuntu-vg/ubuntu-lv is now 58067968 (4k) blocks long.

And we can verify with this:

$ df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 794M 79M 716M 10% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 218G 57G 152G 28% /

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda2 2.0G 245M 1.6G 14% /boot

tmpfs 794M 4.0K 794M 1% /run/user/1000

I rebooted the server after this with sudo reboot.
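The sizes reported along the way can be cross-checked with a little arithmetic. The helpers below are hypothetical, and the sketch assumes the volume group uses 4 MiB extents (the LVM default, which is consistent with the extent counts lvextend printed):

```shell
# Hypothetical helpers for cross-checking the sizes in this post.
# ASSUMPTION: 4 MiB extents (LVM default) and 4 KiB filesystem blocks.

extents_to_gib() {
  awk -v e="$1" 'BEGIN { printf "%.2f\n", e * 4 / 1024 }'
}

blocks_to_gib() {
  awk -v b="$1" 'BEGIN { printf "%.2f\n", b * 4096 / (1024 ^ 3) }'
}

# Decimal gigabytes (10^9 bytes) to binary gibibytes (2^30 bytes).
gb_to_gib() {
  awk -v g="$1" 'BEGIN { printf "%.2f\n", g * 1000000000 / (1024 ^ 3) }'
}

extents_to_gib 15103     # LV before lvextend -> 59.00
extents_to_gib 56707     # LV after lvextend  -> 221.51
blocks_to_gib 58067968   # filesystem after resize2fs -> 221.51
gb_to_gib 240            # the "240G" typed into parted -> 223.52
```

The extent and block counts agree, so the filesystem fills the whole logical volume. The last line hints at why the volume ended up at 221.51 GiB rather than closer to 240: parted appears to have read 240G as decimal gigabytes (it also reports the disk as 258GB, where lsblk says 240G), so the partition probably stops roughly 16 GiB short of the end of the disk. Assuming parted accepts a percentage here, as it does for other end positions, answering the End? prompt with 100% should claim all of it.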

Now I can troubleshoot with a bit more room to play. Upon reboot, all my containers and services started up successfully.
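Since this is not the first time I have run out of space here, this is a rough sketch of the LVM part of the procedure as a single function. grow_root and run are hypothetical names, the default paths are the ones from this VM, and it assumes the partition has already been enlarged with parted. By default it only prints the commands, so nothing happens by accident:

```shell
# grow_root is a hypothetical wrapper around the three commands used above.
# ASSUMPTIONS: the partition was already resized with parted, and the
# paths below match this VM (override PV and LV_PATH for another layout).
PV="${PV:-/dev/sda3}"
LV_PATH="${LV_PATH:-/dev/ubuntu-vg/ubuntu-lv}"

# Print the command unless RUN=1 is set, in which case execute it.
run() {
  if [ "${RUN:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

grow_root() {
  run sudo pvresize "$PV" &&
  run sudo lvextend -l +100%FREE "$LV_PATH" &&
  run sudo resize2fs "$LV_PATH"
}
```

Calling grow_root prints the three commands in order, and RUN=1 grow_root executes them, stopping at the first failure. lvextend also has a -r (--resizefs) option that resizes the filesystem in the same step, which would make the separate resize2fs call unnecessary.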
